Organizations generate vast amounts of data every day. From transaction records to user interactions, this data can inform business decisions, improve customer experiences, and support growth. That value is only realized when a solid data architecture is in place.

- Understand the Importance of Data Architecture for Analytics

- Key Components of Data Architecture for Analytics

- Scalability and Flexibility

- Security and Compliance

- Data Governance

- Best Practices for Building Data Architecture for Analytics

- Conclusion

Building a good data architecture for analytics is crucial for extracting actionable insights from your data. It involves planning how data will be collected, stored, processed, and accessed so that analytics stays efficient as volumes grow. With so many moving parts and technology choices, it can feel overwhelming. This post walks through the essential steps for creating a robust data architecture for analytics, so that your data infrastructure can handle the increasing demands of modern analytics.

1. Understand the Importance of Data Architecture for Analytics

Before diving into the technical aspects, it’s important to understand why good data architecture is critical. A well-designed data architecture ensures that:

- Data is accessible and reliable: The right people can access the right data at the right time.

- Data is high-quality: Data quality and integrity are maintained, reducing errors in analysis.

- The system scales: As data grows, your architecture can expand without a redesign.

- Data is secure and compliant: Sensitive data is protected, and regulations such as GDPR are met.

- Queries perform well: Analytics queries run efficiently and return insights quickly.

Without a robust data architecture, organizations often face challenges such as slow query performance, data silos, poor data quality, and difficulty scaling. The ultimate goal of a good data architecture is to make your data an asset that drives better decision-making and business outcomes.

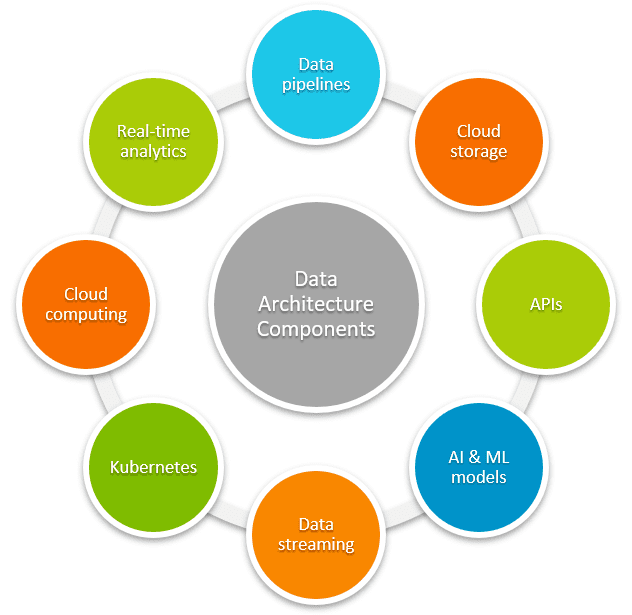

2. Key Components of Data Architecture for Analytics

To build a strong data architecture, you need to break it down into key components that work together seamlessly. These include data sources, data storage, data processing, data pipelines, and analytics tools. Let’s explore each component.

a. Data Sources

The foundation of any data architecture begins with the sources of data. These can range from internal systems like CRM, ERP, or transactional databases, to external sources like social media, IoT devices, or third-party APIs. Understanding your data sources and how to integrate them is crucial.

- Internal Data Sources: Often structured, these include databases, logs, application data, and files. They are easier to manage and integrate, but they can also create silos within the organization.

- External Data Sources: These are often unstructured or semi-structured data sources like social media posts, sensor data, or external APIs. While valuable, they require more effort to parse and analyze.

Building a good data architecture means having clear strategies to integrate data from both internal and external sources in a way that makes sense for your analytical goals.
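As a rough illustration of that integration step, the sketch below normalizes a structured internal export and a semi-structured external payload into one shared shape. The sample data, the field names (`customer_id`, `source`, `payload`), and the helper functions are all hypothetical and chosen only for this example; a real integration would depend on your actual systems.

```python
import csv
import io
import json

# Hypothetical internal export: structured CSV from a CRM system.
CRM_CSV = """customer_id,email,signup_date
101,ana@example.com,2024-01-15
102,raj@example.com,2024-02-03
"""

# Hypothetical external payload: semi-structured JSON from a third-party API.
API_JSON = """[
  {"user": {"id": "101", "handle": "@ana"}, "event": "mention", "ts": "2024-03-01T10:00:00Z"},
  {"user": {"id": "999"}, "event": "like"}
]"""


def read_internal(text):
    """Parse structured CSV rows into records keyed by customer_id."""
    return [
        {"customer_id": int(row["customer_id"]), "source": "crm", "payload": dict(row)}
        for row in csv.DictReader(io.StringIO(text))
    ]


def read_external(text):
    """Flatten nested JSON, tolerating optional fields that may be absent."""
    records = []
    for item in json.loads(text):
        user = item.get("user", {})
        records.append({
            "customer_id": int(user["id"]),
            "source": "social_api",
            "payload": {"event": item.get("event"), "ts": item.get("ts")},
        })
    return records


def integrate(internal, external):
    """Group records from both sources under a shared customer key."""
    combined = {}
    for record in internal + external:
        combined.setdefault(record["customer_id"], []).append(record)
    return combined


combined = integrate(read_internal(CRM_CSV), read_external(API_JSON))
for customer_id, records in sorted(combined.items()):
    print(customer_id, [r["source"] for r in records])
```

Customer 999 appears only in the external feed, which is the kind of mismatch an integration strategy has to decide how to handle.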

b. Data Storage

Once data is gathered from various sources, it needs to be stored in a way that is efficient, scalable, and secure. There are two main approaches for data storage in analytics architectures:

- Data Warehouses: These are designed for structured data and are optimized for analytics queries. They can store large volumes of historical data and support complex analytics operations. Cloud-based data warehouses like Amazon Redshift, Google BigQuery, and Snowflake are popular choices for modern data architectures due to their scalability and performance.

- Data Lakes: A data lake stores raw data in its original format, whether structured, semi-structured, or unstructured, including data that may not have a clear purpose yet. It’s typically used for big data that doesn’t fit into traditional database structures, such as logs, images, and videos. Storage services like Amazon S3 or Azure Data Lake Storage are commonly used for this purpose.

The choice between a data lake and a data warehouse depends on your use case. In many modern architectures, a lakehouse approach is also gaining popularity: it aims to combine the flexible, low-cost storage of a lake with the query and management features of a warehouse.
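One way to picture the difference is when structure is enforced. In the minimal sketch below, a temporary directory of JSON Lines files stands in for a lake and an in-memory SQLite table stands in for a warehouse. Both stand-ins, the date-partitioned path, and the sample records are assumptions made for illustration, not how any particular product works.

```python
import json
import sqlite3
import tempfile
from pathlib import Path

# Hypothetical order events; the second carries an extra nested field.
events = [
    {"order_id": 1, "amount_cents": 1999, "note": "gift wrap"},
    {"order_id": 2, "amount_cents": 500, "device": {"os": "ios"}},
]

# Lake-style: write raw records as-is; structure is interpreted when read.
lake_dir = Path(tempfile.mkdtemp()) / "raw" / "orders" / "dt=2024-03-01"
lake_dir.mkdir(parents=True)
lake_file = lake_dir / "part-0000.jsonl"
lake_file.write_text("\n".join(json.dumps(e) for e in events) + "\n", encoding="utf-8")

# Warehouse-style: a fixed, typed table; only the declared columns are kept.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount_cents INTEGER NOT NULL)"
)
warehouse.executemany(
    "INSERT INTO orders (order_id, amount_cents) VALUES (?, ?)",
    [(e["order_id"], e["amount_cents"]) for e in events],
)

# Reading back: the lake returns everything that was written, nested fields included.
raw_rows = [json.loads(line) for line in lake_file.read_text(encoding="utf-8").splitlines()]

# The warehouse answers an aggregate query directly over its typed columns.
total_cents = warehouse.execute("SELECT SUM(amount_cents) FROM orders").fetchone()[0]

print(raw_rows[1]["device"], total_cents)
```

The lake keeps the `note` and `device` fields that the table schema discards, while the table is immediately ready for aggregate queries.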

c. Data Processing

Data processing involves transforming, cleaning, and preparing data for analytics. This is where much of the heavy lifting happens. Raw data collected from various sources is often incomplete or messy and needs to be processed before analysis.

- ETL (Extract, Transform, Load): This is the traditional approach, where data is extracted from source systems, transformed into the correct format, and then loaded into the data warehouse.

- ELT (Extract, Load, Transform): In the ELT process, raw data is first loaded into the data lake or warehouse, and transformations are then performed inside that system, often on demand. This approach is gaining traction, especially with cloud-based solutions, due to its flexibility and scalability.

Automating data processing using ETL/ELT pipelines can help streamline the process, ensure data consistency, and improve the overall performance of the analytics system.
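The two orderings can be sketched side by side. The example below uses an in-memory SQLite database as a stand-in for the warehouse; the sample rows, the table names, and the cleaning rules (trim and lowercase emails, drop rows without one, convert amounts to cents) are assumptions made up for this illustration.

```python
import sqlite3

# Hypothetical messy source rows: (email, amount as text).
SOURCE_ROWS = [
    (" Ana@Example.com ", "19.50"),
    ("raj@example.com", "5"),
    ("", "3.25"),  # missing email: excluded by the transformation
]


def etl(rows):
    """ETL: transform in application code, then load only the clean result."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE sales (email TEXT, amount_cents INTEGER)")
    clean = [
        (email.strip().lower(), round(float(amount) * 100))
        for email, amount in rows
        if email.strip()
    ]
    db.executemany("INSERT INTO sales VALUES (?, ?)", clean)
    return db


def elt(rows):
    """ELT: load raw rows untouched, then transform with SQL inside the store."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE raw_sales (email TEXT, amount TEXT)")
    db.executemany("INSERT INTO raw_sales VALUES (?, ?)", rows)
    db.execute(
        """
        CREATE VIEW sales AS
        SELECT lower(trim(email)) AS email,
               CAST(round(CAST(amount AS REAL) * 100) AS INTEGER) AS amount_cents
        FROM raw_sales
        WHERE trim(email) <> ''
        """
    )
    return db


query = "SELECT email, amount_cents FROM sales ORDER BY email"
etl_db = etl(SOURCE_ROWS)
elt_db = elt(SOURCE_ROWS)
etl_result = etl_db.execute(query).fetchall()
elt_result = elt_db.execute(query).fetchall()
raw_count = elt_db.execute("SELECT COUNT(*) FROM raw_sales").fetchone()[0]

print(etl_result == elt_result, raw_count)
```

Both paths produce the same `sales` rows here. The practical difference is that the ELT store still holds all the raw rows, so the transformation can be revised later without re-extracting from the source.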

d. Data Pipelines

Data pipelines are the workflows that move data from one stage to another. They enable data processing, transformation, and loading into storage or analytical tools in an automated and repeatable way. A good data pipeline architecture ensures that data flows smoothly between various components, such as data sources, storage, and analytics tools, with minimal disruption.

Data pipelines can be batch-based (where data is processed in chunks) or real-time (where data is processed continuously as it arrives). Real-time pipelines are particularly important for time-sensitive analytics, like fraud detection or monitoring customer activity.
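To make the contrast concrete, here is a small sketch that applies the same fraud-style check in both modes. The event list, the `THRESHOLD` value, and the function names are hypothetical; a production pipeline would typically rely on a scheduler or a streaming platform rather than plain Python loops.

```python
from itertools import islice

# Hypothetical transaction events: (account, amount).
EVENTS = [("a1", 40), ("a2", 9500), ("a1", 25), ("a3", 12000), ("a2", 30)]
THRESHOLD = 5000  # assumed alert threshold, for illustration only


def chunked(iterable, size):
    """Yield successive lists of up to `size` items."""
    iterator = iter(iterable)
    while chunk := list(islice(iterator, size)):
        yield chunk


def run_batch(events, size):
    """Batch: process events in chunks; alerts surface only once a chunk is handled."""
    alerts = []
    for chunk in chunked(events, size):
        alerts.extend(e for e in chunk if e[1] > THRESHOLD)
    return alerts


def run_stream(events):
    """Streaming: inspect each event as it arrives and yield alerts immediately."""
    for event in events:
        if event[1] > THRESHOLD:
            yield event


batch_alerts = run_batch(EVENTS, size=2)
stream_alerts = list(run_stream(EVENTS))
print(batch_alerts)
print(stream_alerts)
```

Both modes flag the same events. What differs is latency: the generator can hand an alert to a consumer as soon as the suspicious event is seen, while the batch version waits for its chunk.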

e. Analytics Tools

Once your data is processed and stored, it’s time to make sense of it. Analytics tools are what you’ll use to query, visualize, and analyze your data. This could include:

- Business Intelligence (BI) tools: These are used for visualizing and reporting on data. Popular tools include Tableau, Power BI, and Looker.

- Data Science Platforms: These tools are used for more advanced analysis and predictive modeling. Examples include Python-based environments, R, and machine learning platforms like Databricks or Google Cloud’s Vertex AI.

The key is to choose tools that align with your organization’s needs and user capabilities. The right tools will make it easier to create dashboards, generate reports, and discover insights from your data.

3. Scalability and Flexibility

Scalability is a fundamental consideration when building a data architecture. As data volumes increase, you need a system that can grow without compromising performance. Modern cloud-based architectures excel at this by offering flexible storage and compute resources that can scale dynamically.

Additionally, your data architecture should be flexible enough to accommodate changing business needs. As new data sources emerge or new analytics tools are introduced, your architecture should be able to absorb these changes with minimal friction.

4. Security and Compliance

Data security and compliance should never be an afterthought. With growing concerns about data breaches and increasing regulatory requirements, it’s important to build security into your data architecture from the start.

- Data encryption (both at rest and in transit) helps protect sensitive information.

- Access control ensures that only authorized users can access certain types of data.

- Auditing and monitoring help track data usage and detect unauthorized activity.

- Compliance with regulations such as GDPR, CCPA, or HIPAA should be part of the design, ensuring that personal and sensitive data is handled according to legal standards.

Security and compliance will not only protect your data but also build trust with stakeholders and customers.
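Two of the controls above, access control and auditing, can be sketched in a few lines, along with keyed hashing to hide a direct identifier. Everything named here (the roles, the column policy, the hard-coded key, the `read_record` helper) is a hypothetical illustration. It is not encryption, and it does not by itself make a system compliant with any regulation.

```python
import hashlib
import hmac

# Assumption: a real key would come from a secrets manager, never from source code.
PSEUDONYM_KEY = b"demo-key-do-not-use"

# Hypothetical policy mapping each role to the columns it may read.
COLUMN_POLICY = {
    "analyst": {"customer_ref", "country", "order_total"},
    "support": {"customer_ref", "email", "country"},
}

audit_log = []  # in-memory stand-in for an append-only audit trail


def pseudonymize(value):
    """Replace an identifier with a keyed hash so rows stay joinable but not readable."""
    digest = hmac.new(PSEUDONYM_KEY, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def read_record(record, role, user):
    """Return only the columns the role may see, and log every access attempt."""
    allowed = COLUMN_POLICY.get(role)
    if allowed is None:
        audit_log.append((user, role, "denied"))
        raise PermissionError(f"role {role!r} has no access")
    audit_log.append((user, role, "granted"))
    view = dict(record, customer_ref=pseudonymize(record["email"]))
    return {k: v for k, v in view.items() if k in allowed}


record = {"email": "ana@example.com", "country": "PT", "order_total": 120}
analyst_view = read_record(record, "analyst", "dana")
support_view = read_record(record, "support", "sam")
print(sorted(analyst_view), sorted(support_view))
```

The analyst never sees the email address but can still join on `customer_ref`, and every read, granted or denied, leaves an entry in `audit_log`.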

5. Data Governance

Data governance is about managing the availability, usability, integrity, and security of your data. It involves setting policies and practices for ensuring the quality and consistency of your data. A well-governed data architecture helps eliminate data silos, improves data quality, and reduces the risk of poor decision-making based on bad data.

Key aspects of data governance include:

- Data quality management: Ensuring that the data used for analytics is clean, accurate, and consistent.

- Data stewardship: Assigning responsibilities to ensure that data is properly managed and used across the organization.

- Data lineage tracking: Knowing where data comes from, how it’s transformed, and where it goes.
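Quality management and lineage tracking can both start very small. The sketch below is one possible shape, assuming a hypothetical `Dataset` wrapper that carries its own lineage and a report that counts missing values and duplicate keys. The names and checks are illustrative, not a standard.

```python
from dataclasses import dataclass, field


@dataclass
class Dataset:
    name: str
    rows: list
    lineage: list = field(default_factory=list)  # ordered (step, input_name) pairs


def derive(source, name, step, fn):
    """Apply a transformation and record which dataset and step produced the result."""
    return Dataset(name, fn(source.rows), source.lineage + [(step, source.name)])


def quality_report(dataset, required, key):
    """Count rows with missing required fields and rows that repeat a key."""
    missing = sum(
        1 for r in dataset.rows if any(r.get(c) in (None, "") for c in required)
    )
    keys = [r.get(key) for r in dataset.rows]
    duplicates = len(keys) - len(set(keys))
    return {
        "rows": len(dataset.rows),
        "missing_required": missing,
        "duplicate_keys": duplicates,
    }


# Hypothetical export with one empty email and one repeated id.
raw = Dataset("crm_export", [
    {"id": 1, "email": "ana@example.com"},
    {"id": 2, "email": ""},
    {"id": 2, "email": "raj@example.com"},
])
clean = derive(
    raw, "customers_clean", "drop_missing_email",
    lambda rows: [r for r in rows if r["email"]],
)

raw_report = quality_report(raw, required=["email"], key="id")
clean_report = quality_report(clean, required=["email"], key="id")
print(raw_report)
print(clean_report)
print(clean.lineage)
```

Running the same report before and after a cleaning step shows whether the step helped, and the `lineage` list answers where `customers_clean` came from.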

6. Best Practices for Building Data Architecture for Analytics

Here are a few best practices to keep in mind when building a data architecture for analytics:

- Start with the end in mind: Understand what kind of analysis or insights you need before designing your architecture.

- Choose the right tools: Select tools that are aligned with your organization’s needs, scale, and data volume.

- Focus on data quality: Make sure your data is clean, consistent, and accurate. Poor data quality will lead to misleading insights.

- Automate where possible: Automating processes like data integration, transformation, and monitoring can save time and improve efficiency.

- Iterate and improve: Data architecture isn’t static. As technology and business needs evolve, so should your data architecture.

Conclusion

Building a good data architecture for analytics requires careful planning, the right tools, and a focus on scalability, security, and governance. By considering the key components (data sources, storage, processing, pipelines, and analytics tools), you can create an infrastructure that supports the needs of your business and enables data-driven decision-making. With the right architecture in place, you’ll be able to make better decisions today and to scale and adapt for the challenges of tomorrow.